AMD ROCm on Windows Issues

Troubleshooting common issues with AMD ROCm on Windows


Msty supports AMD ROCm on Windows out of the box, and we even have a dedicated installer for it. However, things can sometimes go wrong: your GPU might not be supported, or it might not be detected at all. Here are some troubleshooting steps you can take to resolve the issue.

Get the latest version of Msty

We are constantly improving Msty and adding support for new GPUs. Make sure you have the latest version of Msty installed on your system. You can download the latest version from the Msty website.

Try a different installer

Instead of the dedicated AMD ROCm on Windows installer, you can try the generic Auto installer and see if that works for you. You can download the Auto installer from the Msty website. If nothing else works, give the CPU-only installer a try as well.

Check if your GPU is supported

Not all AMD GPUs are supported by Msty (or by Ollama, the underlying Local AI service). Check here to see if your GPU is supported: Supported GPUs.

Check if your GPU is detected

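Open a terminal, change to the Msty folder, and start the Local AI service manually with msty-local.exe serve. Its output shows whether your GPU is detected, along with other useful information. In PowerShell, run cd $env:APPDATA\Msty and then ./msty-local.exe serve; in Command Prompt, run cd %appdata%\Msty and then msty-local.exe serve.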
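If the serve output is long, a small Python helper can launch the server and echo only the lines that look GPU-related. This is a sketch, not a Msty tool: it assumes msty-local.exe lives in %APPDATA%\Msty, the function names are hypothetical, and the keyword list (gpu, rocm, gfx) is a guess, since log wording varies between versions.

```python
import os
import subprocess
from pathlib import Path
from typing import Iterable, Iterator

# Hypothetical keyword list: log wording differs between Ollama/Msty versions,
# so this only narrows the output, it does not parse it.
KEYWORDS = ("gpu", "rocm", "gfx")


def msty_dir() -> Path:
    r"""Return %APPDATA%\Msty (Windows only; raises KeyError if APPDATA is unset)."""
    return Path(os.environ["APPDATA"]) / "Msty"


def gpu_lines(lines: Iterable[str]) -> Iterator[str]:
    """Yield the lines that mention any keyword, case-insensitively."""
    for line in lines:
        lowered = line.lower()
        if any(word in lowered for word in KEYWORDS):
            yield line.rstrip()


def run_serve() -> None:
    """Start msty-local.exe serve and print GPU-related lines until Ctrl+C."""
    folder = msty_dir()
    proc = subprocess.Popen(
        [str(folder / "msty-local.exe"), "serve"],
        cwd=folder,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr, where the server log usually goes
        encoding="utf-8",
        errors="replace",
    )
    try:
        for line in gpu_lines(proc.stdout):
            print(line)
    except KeyboardInterrupt:
        pass
    finally:
        proc.terminate()  # stop the server when we are done
        proc.wait()
```

Call run_serve() from a Python prompt on the affected machine and press Ctrl+C to stop. If nothing is printed, run the server directly so you can read the full output.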

Multiple GPUs

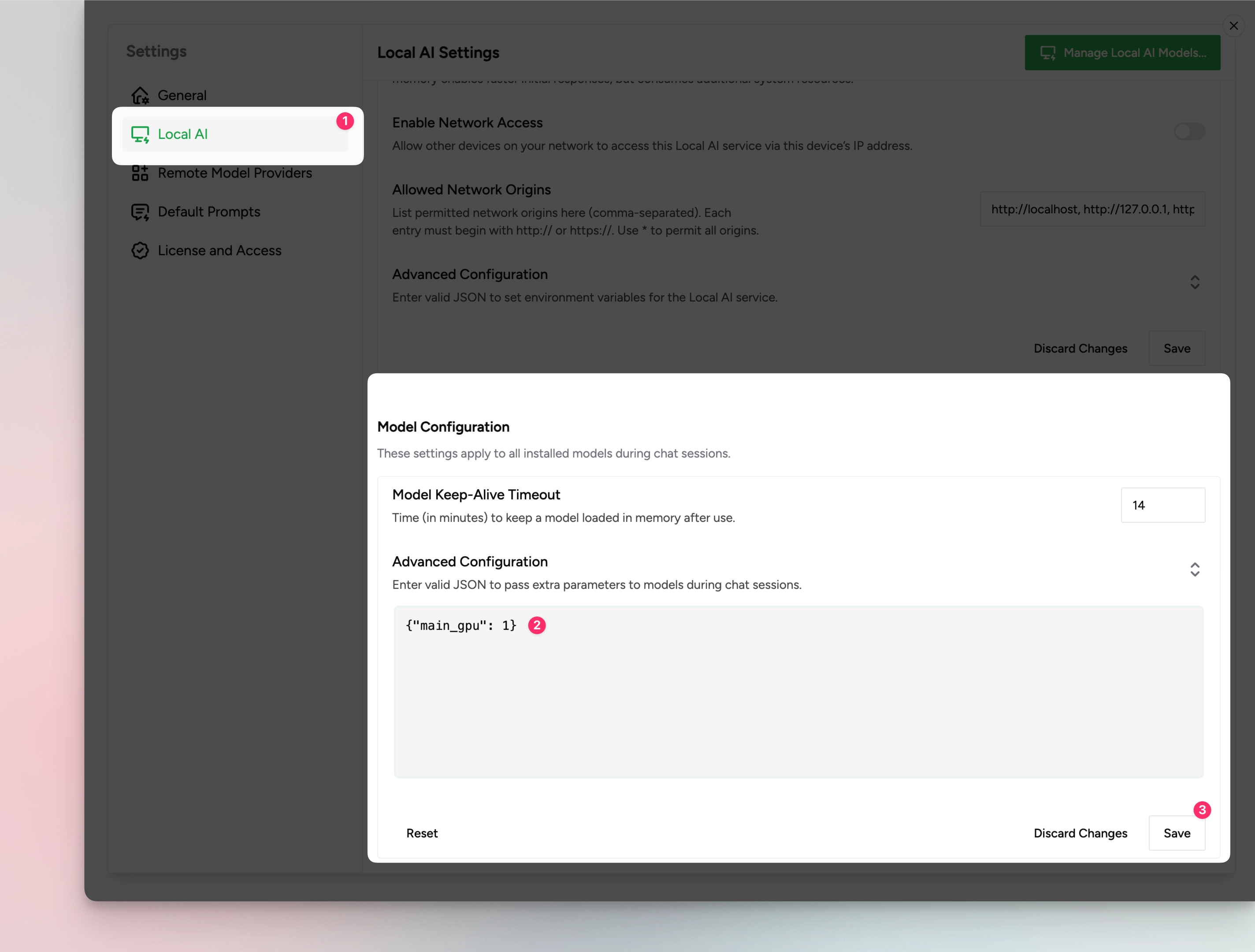

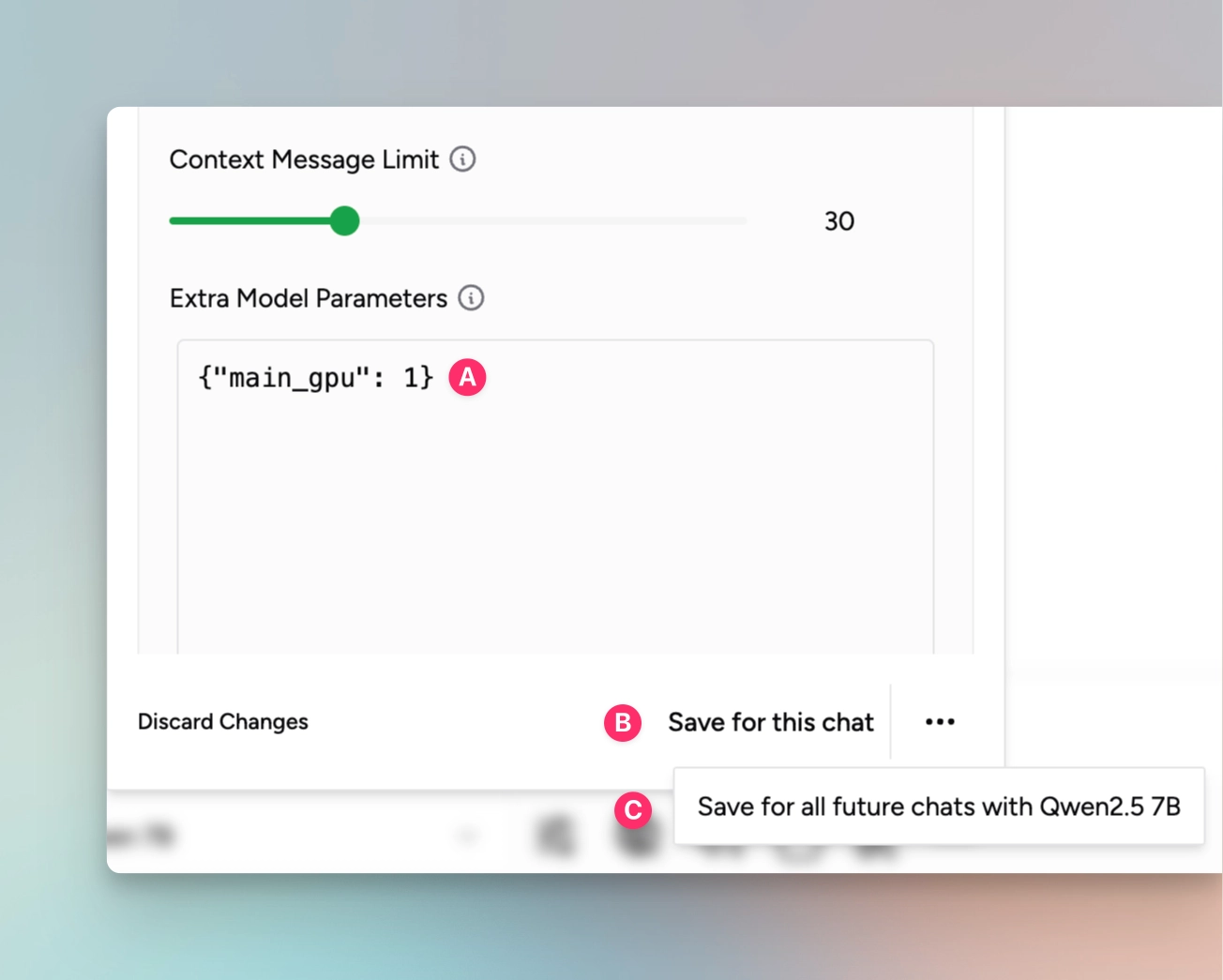

If you have multiple GPUs in your system, you can pass the main_gpu parameter to a model to specify which GPU it should use. You can set this globally for all models in Settings > General Settings > Local AI > Model Configurations > Advanced Configurations, or per model in the model's configuration, which is available while chatting with the model. The value can even be set per chat.
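The same setting can also be sent directly to the Local AI service, which is a quick way to check that a particular GPU responds at all. Because the service is Ollama-based, a request to Ollama's /api/generate endpoint can carry main_gpu in its options object. In this sketch, port 10000 is an assumption about Msty's default, the helper names are hypothetical, and how strictly main_gpu confines a model to one card depends on the Ollama version.

```python
import json
import urllib.request

# Assumption: Msty's Local AI service speaks Ollama's REST API on this port.
# Check your Local AI settings and adjust the URL if it differs.
GENERATE_URL = "http://localhost:10000/api/generate"


def build_generate_request(model: str, prompt: str, main_gpu: int) -> dict:
    """Build an Ollama /api/generate payload that sets main_gpu for one request."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON response instead of a stream
        # Zero-based index of the GPU to use as the main device.
        "options": {"main_gpu": main_gpu},
    }


def send(payload: dict, url: str = GENERATE_URL) -> dict:
    """POST the payload to the Local AI service and decode the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, send(build_generate_request("llama3.2", "Hello", main_gpu=1))["response"] returns the generated text, where "llama3.2" is a placeholder for a model you have already downloaded.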

Custom Ollama Build

If your GPU is unsupported, or you simply can't get Msty to work with it, you can try using a custom build of Ollama:

- From https://github.com/likelovewant/ollama-for-amd/releases, download the latest Ollama AMD archive, ollama-windows-amd64.7z.
- Extract the archive and replace %appdata%\Msty\lib\ollama with its contents.
- Download the ROCBLAS package for your GPU model from https://github.com/likelovewant/ROCmLibs-for-gfx1103-AMD780M-APU/releases.
- Rename %appdata%\Msty\lib\ollama\rocblas\library to library.bck as a backup.
- Extract the library folder from the downloaded ROCBLAS package into %appdata%\Msty\lib\ollama\rocblas\ to replace the old library.
- Run ./msty-local.exe serve from the %appdata%\Msty folder to see whether your GPU is detected, along with other useful information.
- Stop the server and restart Msty.
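The two rocblas steps (back up the existing library folder, then drop in the downloaded one) can be scripted so the backup is never skipped. This is a minimal sketch with hypothetical function names, assuming the Msty folder is %APPDATA%\Msty (the folder that holds msty-local.exe). Close Msty and make sure msty-local is not running first, since Windows may refuse to rename a folder whose files are in use.

```python
import os
import shutil
from pathlib import Path


def msty_ollama_dir() -> Path:
    r"""Return %APPDATA%\Msty\lib\ollama (Windows only)."""
    return Path(os.environ["APPDATA"]) / "Msty" / "lib" / "ollama"


def swap_rocblas_library(ollama_dir: Path, new_library: Path) -> Path:
    r"""Back up rocblas\library as library.bck, then copy in new_library.

    new_library is the 'library' folder extracted from the downloaded
    ROCBLAS package. Returns the path of the backup folder.
    """
    rocblas = ollama_dir / "rocblas"
    current = rocblas / "library"
    backup = rocblas / "library.bck"
    if backup.exists():
        # Refuse to overwrite an earlier backup of the original files.
        raise FileExistsError(f"backup already exists: {backup}")
    if not new_library.is_dir():
        raise NotADirectoryError(f"not a folder: {new_library}")
    current.rename(backup)                 # step 1: keep the old library
    shutil.copytree(new_library, current)  # step 2: install the new one
    return backup
```

Usage: swap_rocblas_library(msty_ollama_dir(), Path(r"C:\Downloads\rocblas\library")), where the download path is a placeholder for wherever you extracted the package. To undo, delete the new library folder and rename library.bck back to library.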

Also,

- Make sure that you have downloaded the latest version of Msty and the right installer for your GPU.
- Use a smaller model to confirm that the GPU is actually detected and used. Once you are sure the GPU is being used, you can move on to larger models.
- Close the app and check Task Manager to make sure msty-local is not running. If it is, end the process and restart the app.
- Reinstall the app: first delete the lib folder in %appdata%\Msty and make sure msty-local is not running.
- If the issue persists, please join our Discord and ask for help in the #msty-app-help channel. Make sure to include your OS, GPU, Msty version, and the model you are trying to use.
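To confirm that a model is really running on the GPU rather than falling back to the CPU, you can ask the Local AI service which models are loaded. Ollama's /api/ps endpoint reports each loaded model's total size and size_vram (bytes held in GPU memory). The sketch below assumes Msty's Local AI exposes that Ollama endpoint on port 10000 (adjust the URL if your settings differ); the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Msty's Local AI service exposes Ollama's REST API on this port.
# Check your Local AI settings and adjust the URL if it differs.
PS_URL = "http://localhost:10000/api/ps"


def placement(model: dict) -> str:
    """Describe where a loaded model lives, based on size and size_vram (bytes)."""
    size = model.get("size", 0)
    vram = model.get("size_vram", 0)
    if size == 0 or vram == 0:
        return "CPU only"
    if vram >= size:
        return "GPU"
    return f"split ({vram / size:.0%} on GPU)"


def report(ps_response: dict) -> list:
    """Return one summary line per model in an /api/ps response."""
    return [
        f"{m.get('name', '?')}: {placement(m)}"
        for m in ps_response.get("models", [])
    ]


def fetch_ps(url: str = PS_URL) -> dict:
    """Fetch the list of currently loaded models from the Local AI service."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Load a small model by chatting with it, then print each line of report(fetch_ps()). A model reported as "CPU only" means the GPU was not used; a "split" result usually means the model is too large to fit entirely in VRAM.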