Set Safety Settings When Using Gemini Models

Learn how to set safety settings when using Gemini models in Msty


Gemini's safety settings control the model's output by filtering potentially harmful, offensive, or inappropriate content. The Gemini API lets you adjust these settings per harm category, from more restrictive thresholds for general audiences to less restrictive ones for specialized use cases or adult content.

Safety Categories

Gemini offers several harm categories, including but not limited to:

- HARM_CATEGORY_UNSPECIFIED

- HARM_CATEGORY_HATE_SPEECH

- HARM_CATEGORY_SEXUALLY_EXPLICIT

- HARM_CATEGORY_HARASSMENT

- HARM_CATEGORY_DANGEROUS_CONTENT

Safety Thresholds

For each category, you can set a threshold level:

- BLOCK_NONE: No filtering for this category

- BLOCK_ONLY_HIGH: Blocks only high-risk content

- BLOCK_MEDIUM_AND_ABOVE: Blocks medium and high-risk content

- BLOCK_LOW_AND_ABOVE: Blocks low, medium, and high-risk content

If no safety settings are specified, Gemini uses default settings that aim to provide a balance between openness and safety.

Setting Safety Settings in Msty

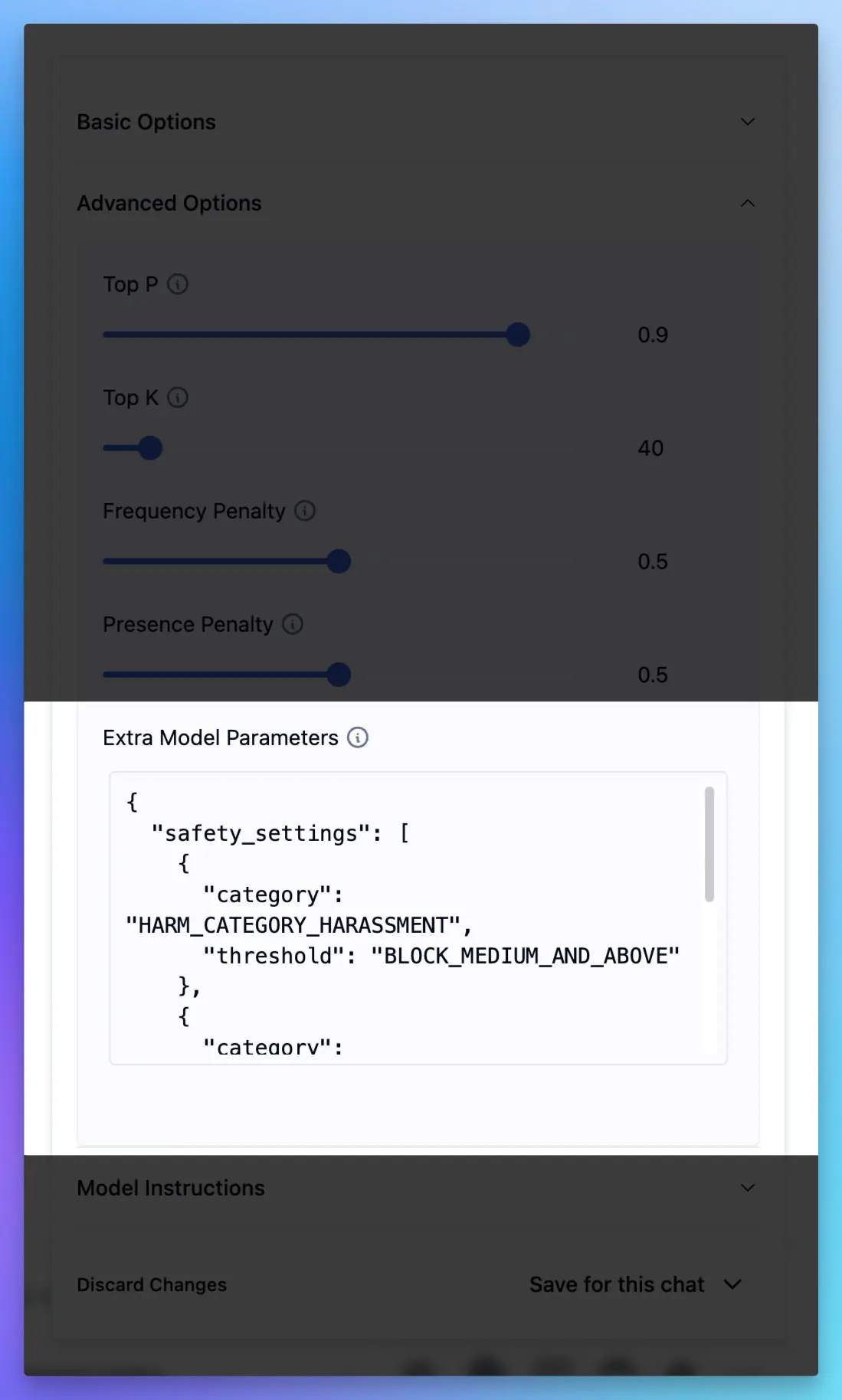

Msty doesn't have a UI to set safety settings for Gemini models. However, you can set them by passing the safetySettings parameter as an extra model parameter. Here's how to set safety settings when using a Gemini model in Msty:

- Click the Model Options icon next to the model selector, below the chat input.

- Open the Advanced Options section and locate the Extra Model Parameters field at the bottom of the options.

- In this field, you'll enter a JSON object to configure the safety settings. Use the following structure:

- Replace "HARM_CATEGORY_NAME" with the specific category you want to adjust.

- Replace "THRESHOLD_LEVEL" with the desired threshold level.

- You can add multiple categories by including additional objects in the "safetySettings" array.
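The structure referenced in the steps above can be sketched as follows. This assumes Msty forwards the object to the Gemini API unchanged, so each entry uses the API's category and threshold keys. The two uppercase values are placeholders to replace, not valid settings:

```json
{
  "safetySettings": [
    {
      "category": "HARM_CATEGORY_NAME",
      "threshold": "THRESHOLD_LEVEL"
    }
  ]
}
```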

Example:
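As an illustration, the following sketch relaxes harassment filtering to block only high-risk content and sets hate speech to block medium and high-risk content. The choice of categories and thresholds here is arbitrary and for demonstration only, not a recommendation:

```json
{
  "safetySettings": [
    {
      "category": "HARM_CATEGORY_HARASSMENT",
      "threshold": "BLOCK_ONLY_HIGH"
    },
    {
      "category": "HARM_CATEGORY_HATE_SPEECH",
      "threshold": "BLOCK_MEDIUM_AND_ABOVE"
    }
  ]
}
```

Categories left out of the array are not overridden and should keep Gemini's default thresholds.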

- Apply the changes and run your model with the new safety settings.

Remember, adjusting these settings can impact the type of content Gemini generates, so use them responsibly and in accordance with your application's needs and ethical guidelines.