Chat with Models from SambaNova

Use Llama 3.1 8B, 70B and 405B models from SambaNova in Msty


At the time of writing, SambaNova is the only provider that offers Llama 3.1 405B on a free tier, which it attributes to its efficient chip architecture. This guide shows how to use SambaNova's models in Msty.

Demo Video

Watch this video for the corresponding tutorial.

API Key and Endpoint

Go to the SambaNova section of the Find API Keys page for more details.

Available Models

The following models are available as of September 17, 2024, on all tiers, including the free tier.

- Meta-Llama-3.1-8B-Instruct

- Meta-Llama-3.1-70B-Instruct

- Meta-Llama-3.1-405B-Instruct

Using the Models in Msty

Follow the steps below to add and use the SambaNova models in Msty.

Open Settings

Open Settings from the sidebar.

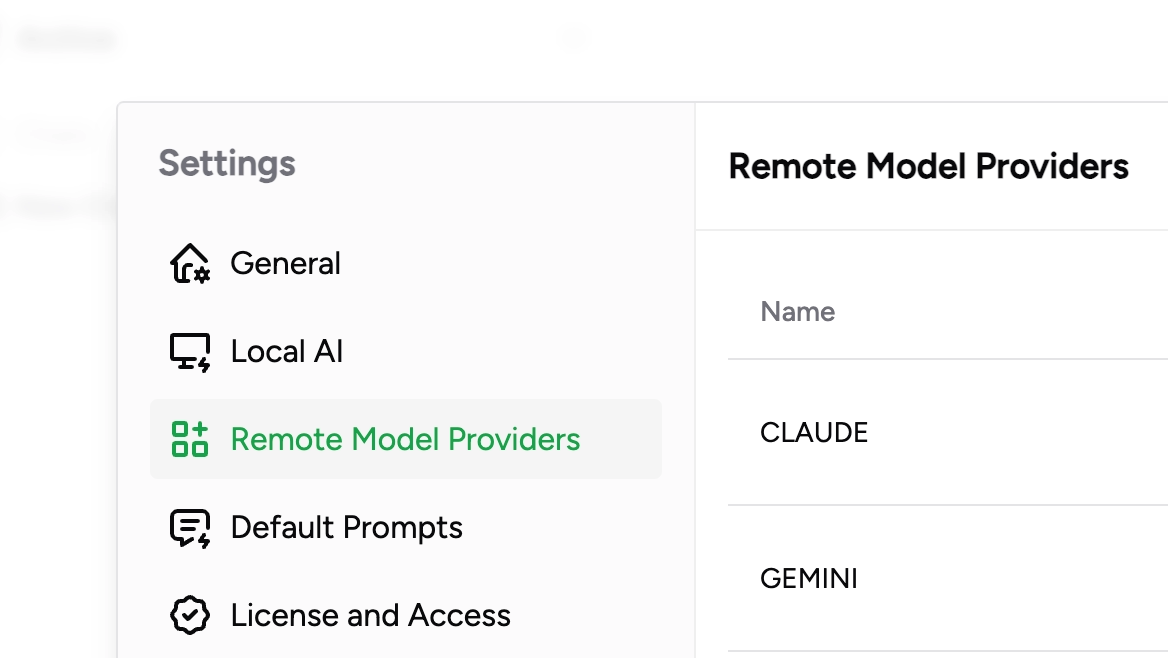

Go to Remote Model Providers

From the options, click Remote Model Providers.

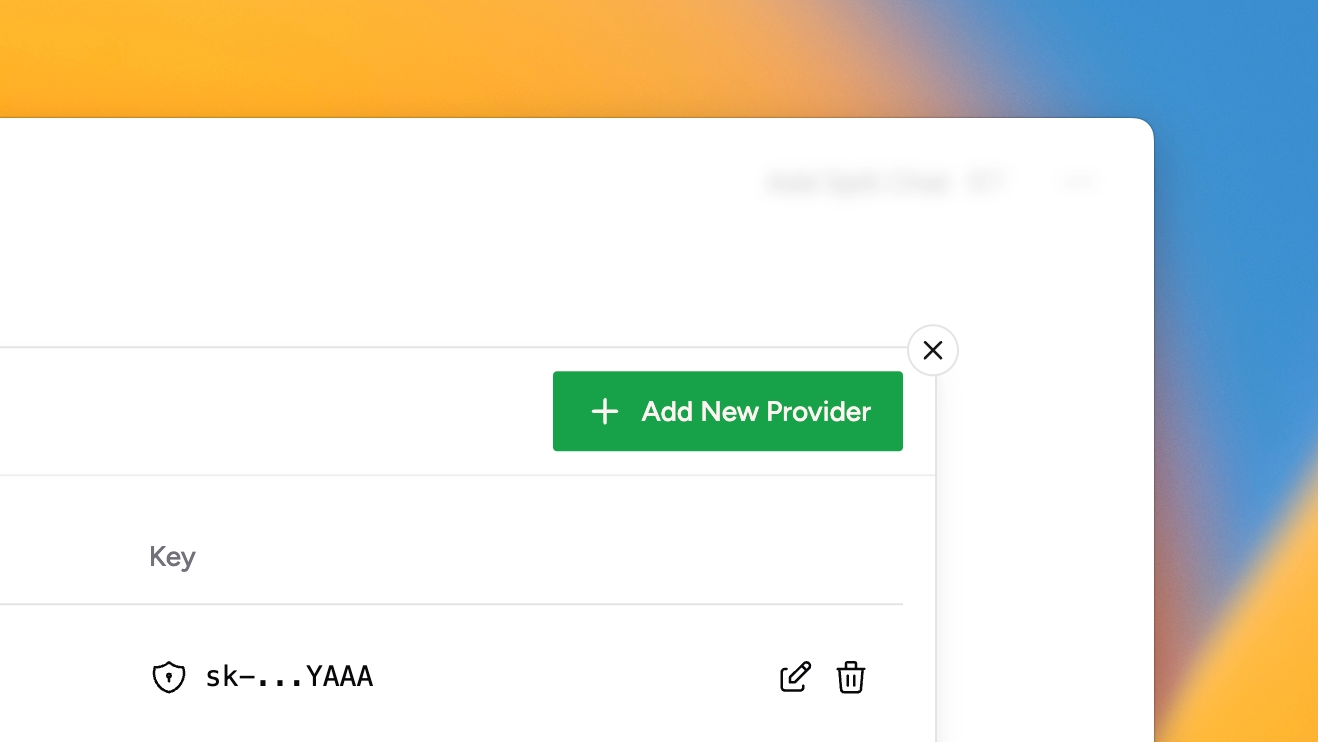

Add New Provider

Click the Add New Provider button in the top-right corner.

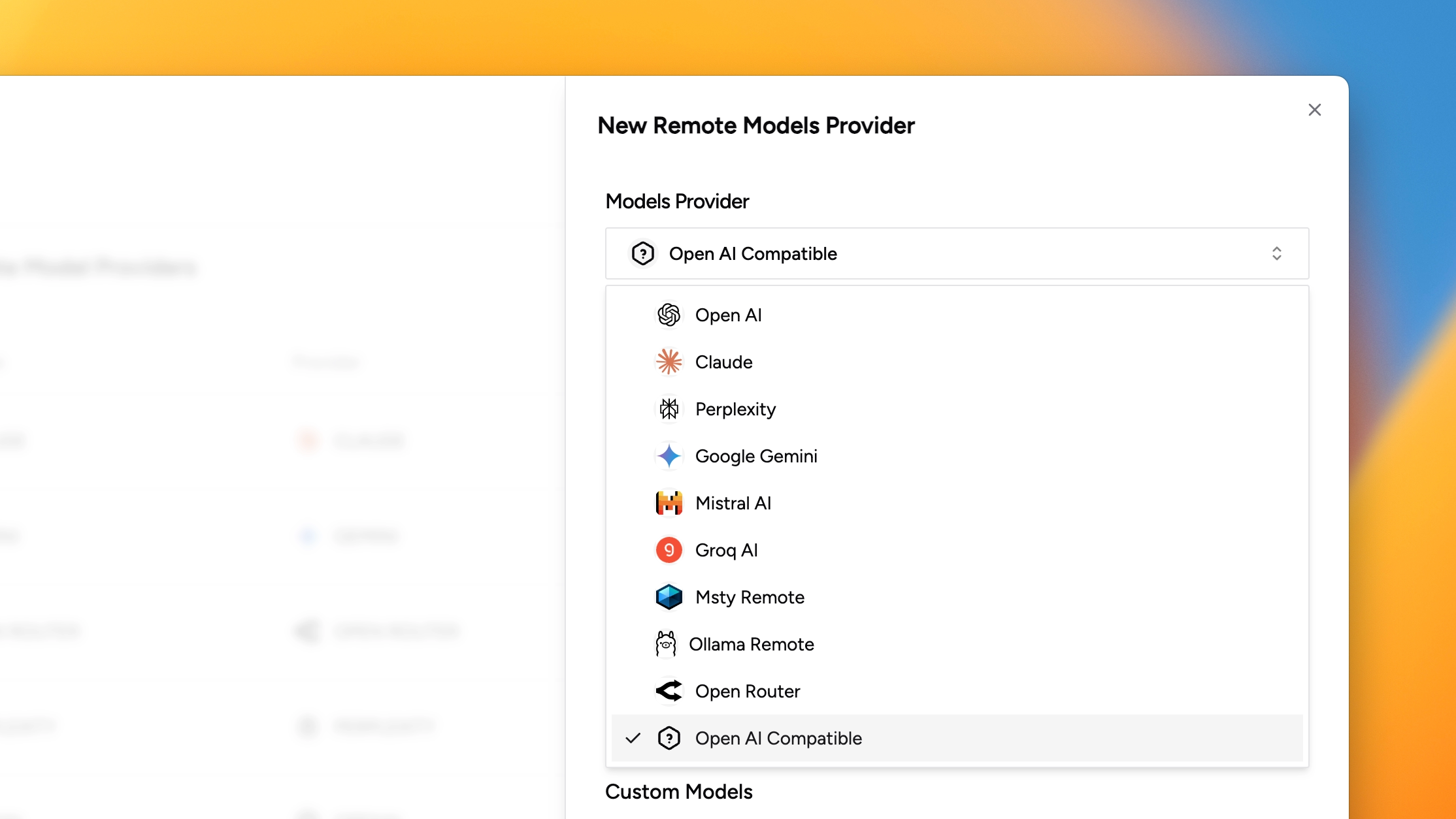

Choose Open AI Compatible Provider

From the Models Provider dropdown, select Open AI Compatible.

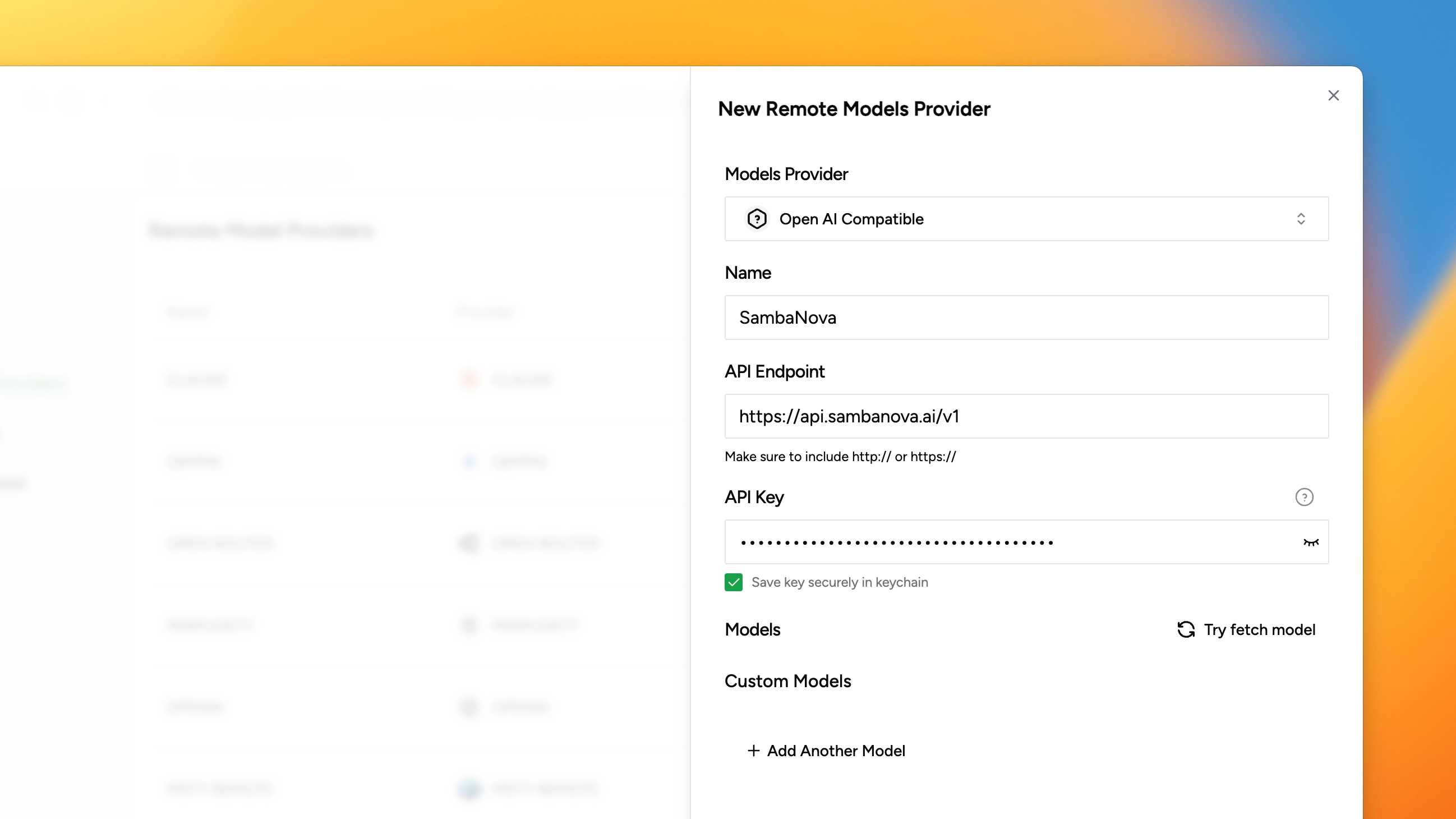

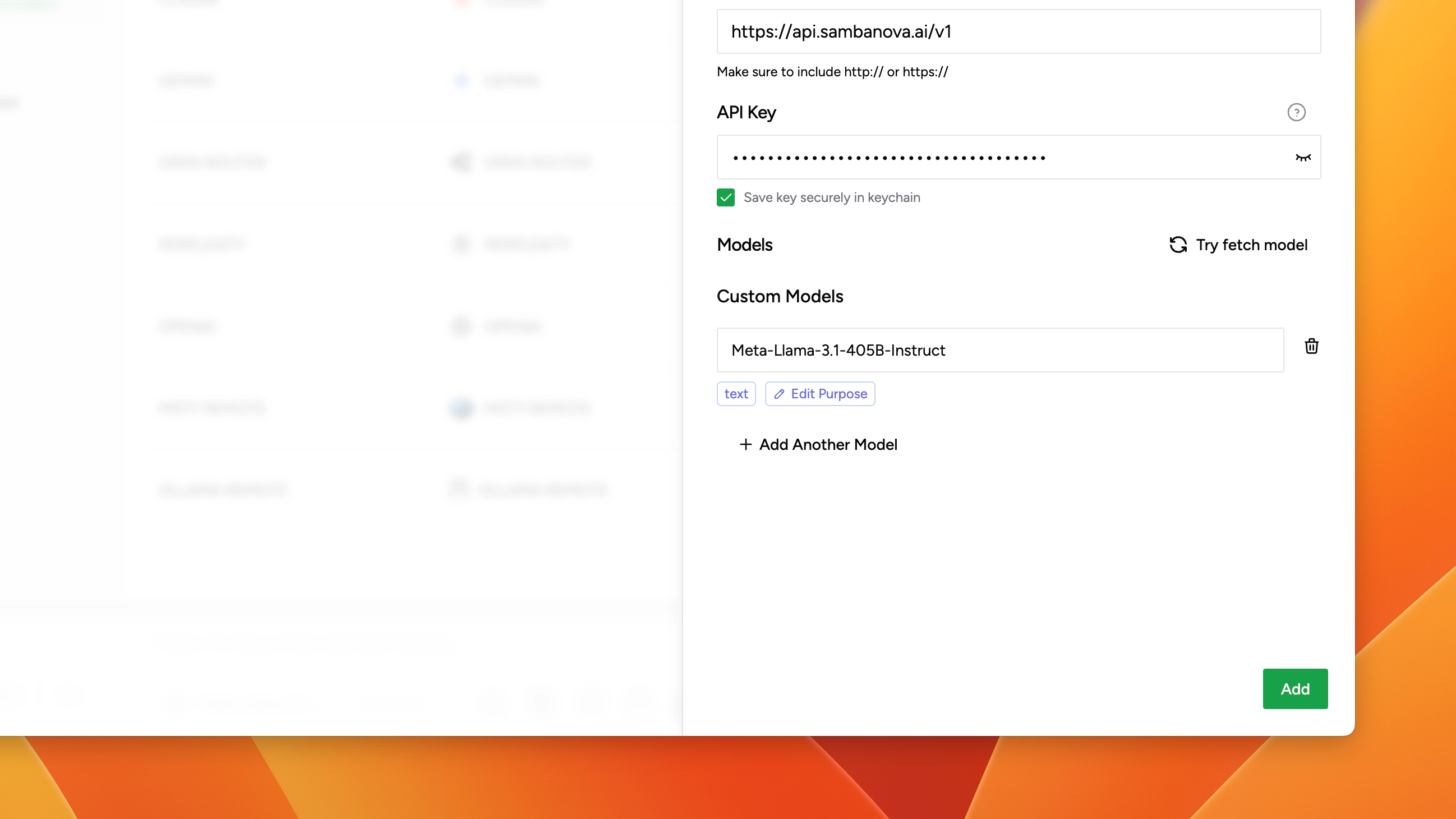

Provide Name, API Endpoint, and API Key

In the input boxes, provide the appropriate values for Name, API Endpoint, and API Key.

- API Endpoint: https://api.sambanova.ai/v1

- API Key: get your API key from https://cloud.sambanova.ai/apis
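Because Msty treats SambaNova as an OpenAI-compatible provider, you can sanity-check the endpoint and key outside Msty with a plain HTTP request. The sketch below is a minimal illustration, not an official client: it assumes the usual OpenAI-style `/chat/completions` route under https://api.sambanova.ai/v1, reads the key from a hypothetical `SAMBANOVA_API_KEY` environment variable, and uses illustrative helper names (`build_chat_request`, `send_chat`).

```python
import json
import os
import urllib.request

# Base URL from the API Endpoint field above; the "/chat/completions" path is
# an assumption based on the usual OpenAI-compatible layout.
BASE_URL = "https://api.sambanova.ai/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    # OpenAI-compatible responses carry the reply in choices[0].message.content.
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical environment variable holding your SambaNova API key.
    key = os.environ.get("SAMBANOVA_API_KEY")
    if key:
        req = build_chat_request(key, "Meta-Llama-3.1-8B-Instruct", "Say hello in one sentence.")
        print(send_chat(req))
    else:
        print("Set SAMBANOVA_API_KEY to send a test request.")
```

Building the request separately from sending it keeps the request shape easy to inspect without a network call. If a real request succeeds, the same endpoint and key should work when entered in Msty.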

Add a Model

SambaNova doesn't return its available models through a /v1/models API endpoint, so Msty can't fetch them automatically. You'll need to add the models manually, and you can add more models later as needed.
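The paragraph above describes a common pattern for OpenAI-compatible clients: try `GET /v1/models`, and fall back to a hand-maintained list when the provider doesn't answer it usefully. The sketch below illustrates that pattern in Python; it is not Msty's actual implementation, and the function names (`fetch_model_ids`, `resolve_models`) and the fallback list are illustrative assumptions.

```python
import json
import urllib.request
from typing import Callable, List

# Model IDs listed on this page (as of September 17, 2024), used as the manual fallback.
MANUAL_MODELS = [
    "Meta-Llama-3.1-8B-Instruct",
    "Meta-Llama-3.1-70B-Instruct",
    "Meta-Llama-3.1-405B-Instruct",
]


def fetch_model_ids(base_url: str, api_key: str) -> List[str]:
    """Query the OpenAI-style /models route and return the model IDs it lists."""
    request = urllib.request.Request(
        f"{base_url}/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    # OpenAI-style list responses look like {"data": [{"id": ...}, ...]}.
    return [entry["id"] for entry in payload.get("data", [])]


def resolve_models(fetch: Callable[[], List[str]], fallback: List[str]) -> List[str]:
    """Use the fetched list if it works and is non-empty; otherwise use the fallback."""
    try:
        models = fetch()
    except (OSError, KeyError, ValueError):
        # OSError covers URLError/HTTPError (e.g. a 404) and timeouts;
        # KeyError/ValueError cover an unexpected or non-JSON payload.
        return list(fallback)
    return models or list(fallback)
```

For example, `resolve_models(lambda: fetch_model_ids("https://api.sambanova.ai/v1", key), MANUAL_MODELS)` returns the manual list whenever the models route is missing or empty, which mirrors why the models have to be entered by hand here.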

You can choose to add any or all of the following models:

- Meta-Llama-3.1-8B-Instruct

- Meta-Llama-3.1-70B-Instruct

- Meta-Llama-3.1-405B-Instruct

Once you are done adding models, click the Add button in the bottom-right corner.

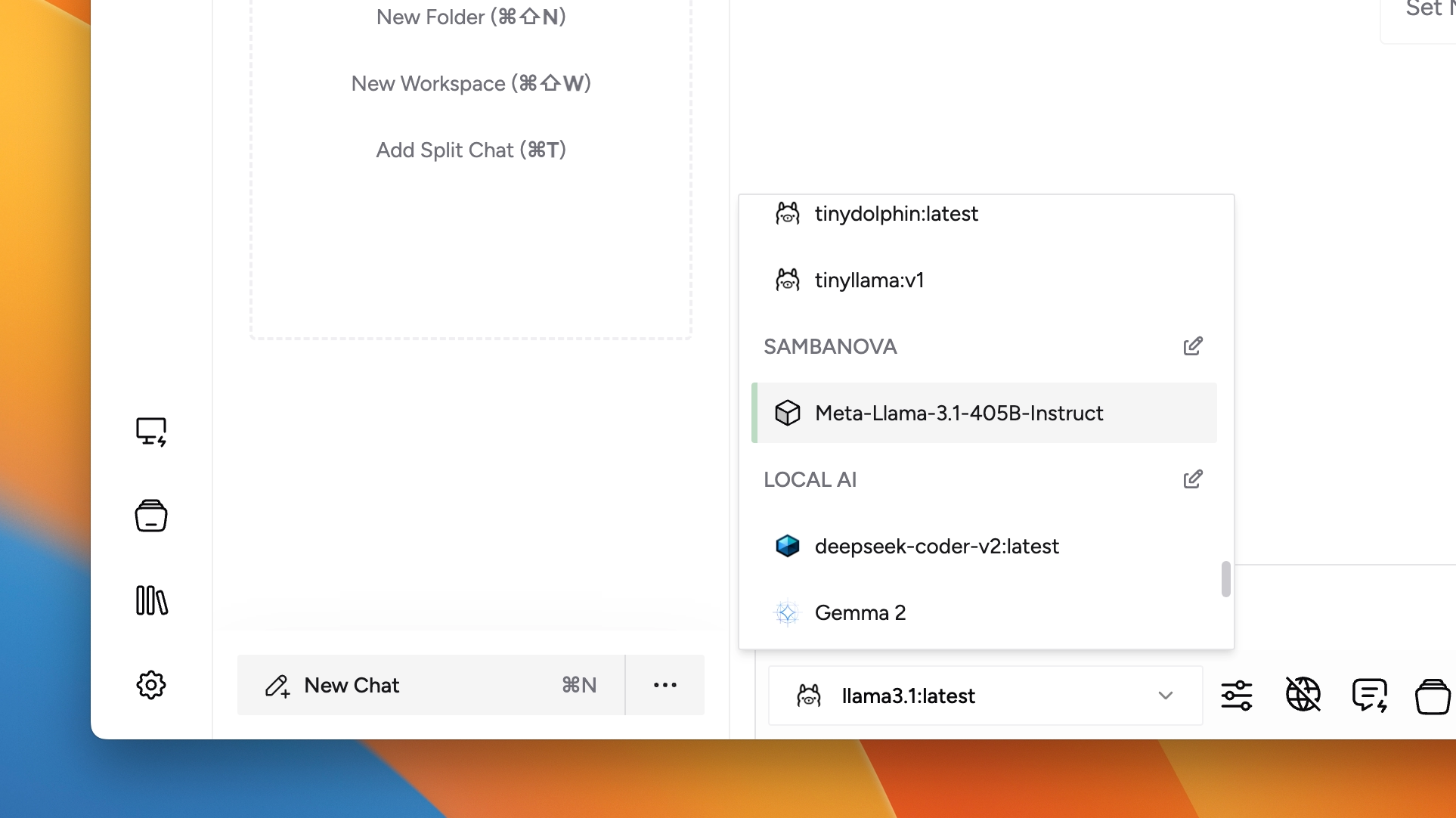

Start Chatting

From the model selector in a new chat, select the model that you just added and start chatting.