Understanding RAG in Knowledge Stacks

Learn how Retrieval Augmented Generation works in Msty

What is RAG?

RAG (Retrieval Augmented Generation) is the technology that powers Knowledge Stacks in Msty. RAG doesn't "train" or "teach" the AI new information. Instead, it works like giving the AI a temporary reference book to consult while answering your questions.

How RAG Works

Think of it like this:

- You: Ask a question about your documents

- Msty:

- Searches your documents for relevant information

- Uses embeddings to find matches

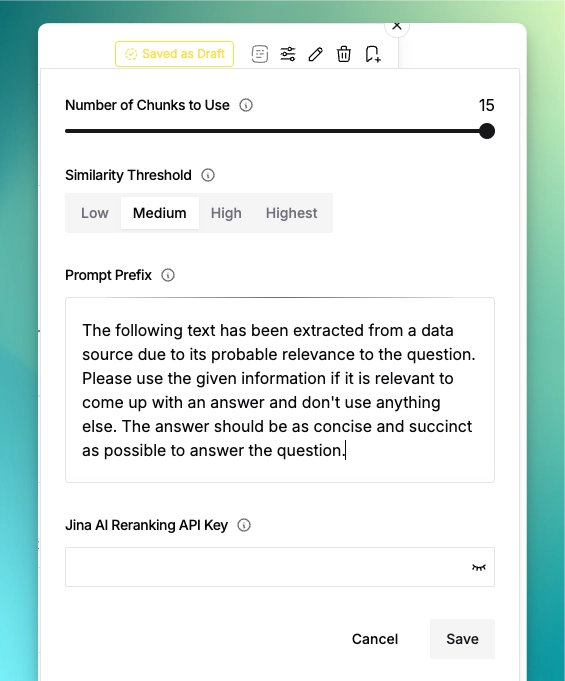

- Selects the best chunks (default: 15)

- AI:

- Receives your question and selected chunks

- Uses this default prompt:

- Generates a focused answer

The AI model itself never learns or remembers your documents. Each time you ask a question, Msty searches your documents again and passes along only what is relevant, like looking up answers in a book each time.
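The flow above can be sketched in a few lines of Python. This is a minimal illustration, not Msty's implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, and the names `retrieve` and `build_prompt`, along with the prompt template, are assumptions made for this example. Note that nothing is stored between questions; the search runs again on every call.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], top_k: int = 15) -> list[str]:
    """Rank chunks by similarity to the question and keep the best top_k."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, selected: list[str]) -> str:
    """Combine retrieved chunks and the question into one prompt.

    The template wording is invented for this sketch; it is not Msty's default prompt.
    """
    context = "\n\n".join(selected)
    return f"Context:\n{context}\n\nQuestion: {question}"


chunks = [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% monthly fee.",
]
question = "When are invoices due?"
selected = retrieve(question, chunks, top_k=2)
prompt = build_prompt(question, selected)
print(prompt)
```

Only the two invoice-related chunks end up in the prompt; the unrelated chunk about office hours is never sent to the model.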

Fine-tuning RAG

Control how RAG works in the chat interface:

- Similarity Threshold:

  - Low: Broader context, more results

  - Medium: Balanced matching

  - High: Strict matching

  - Highest: Only exact matches

- Number of Chunks:

  - Default: 15 chunks

  - More chunks = broader context

  - Fewer chunks = focused answers

- Custom Prompt:

  - Modify the default prompt

  - Guide AI response style

  - Maintain answer focus
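To see how the similarity threshold and the number of chunks interact, here is a small sketch that filters already-scored chunks. The numeric cutoffs in `THRESHOLDS` are hypothetical placeholders (the actual values behind Low, Medium, High, and Highest are not stated here), and `select_chunks` is an illustrative name, not a Msty function.

```python
# Hypothetical cutoffs: the real values behind each level are not documented here.
THRESHOLDS = {"low": 0.2, "medium": 0.4, "high": 0.6, "highest": 0.8}


def select_chunks(scored, threshold="medium", max_chunks=15):
    """Keep chunks scoring at or above the cutoff, best first, capped at max_chunks.

    scored: list of (chunk_text, similarity) pairs with similarity in [0, 1].
    """
    cutoff = THRESHOLDS[threshold]
    kept = [(text, score) for text, score in scored if score >= cutoff]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return [text for text, _ in kept[:max_chunks]]


scored = [("A", 0.91), ("B", 0.65), ("C", 0.45), ("D", 0.25), ("E", 0.10)]

low = select_chunks(scored, "low")                    # broad: most chunks pass
highest = select_chunks(scored, "highest")            # strict: only the closest match
capped = select_chunks(scored, "low", max_chunks=2)   # chunk limit applies after the cutoff
print(low, highest, capped)
```

The threshold decides which chunks are eligible at all, while the chunk count caps how many of the eligible ones are passed along.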

Pro Tip: You can select multiple Knowledge Stacks at once for cross-referencing information!
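One way to picture cross-referencing several Knowledge Stacks is as merging each stack's scored results into a single ranking. The sketch below is an assumption about how such a merge could work, not a description of Msty's internals; `merge_stacks`, the stack names, and the scores are all made up for illustration, and it assumes scores are comparable across stacks (for example, because every stack uses the same embedding model).

```python
def merge_stacks(results_by_stack, max_chunks=15):
    """Merge per-stack (chunk_text, similarity) results into one ranked list.

    Returns (stack_name, chunk_text, similarity) triples, best first.
    """
    merged = [
        (stack, text, score)
        for stack, results in results_by_stack.items()
        for text, score in results
    ]
    merged.sort(key=lambda item: item[2], reverse=True)
    return merged[:max_chunks]


# Illustrative data only: two hypothetical stacks with made-up scores.
results_by_stack = {
    "contracts": [("Payment is due in 30 days.", 0.82), ("Term is 12 months.", 0.40)],
    "emails": [("Client asked to extend payment to 45 days.", 0.77)],
}
top = merge_stacks(results_by_stack, max_chunks=2)
for stack, text, score in top:
    print(f"[{stack}] {score:.2f} {text}")
```

Keeping the stack name next to each chunk lets the answer draw on both sources while still showing where each snippet came from.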

Why Use RAG?

- Accuracy:

- References specific facts

- Reduces "hallucinations"

- Provides sourced answers

- Privacy:

- Documents stay local

- Only relevant snippets sent

- Full control over data

- Cost Effective:

- Sends minimal context

- No training needed

- Works with any AI model

Want to optimize your results? Learn about chunk settings and embedding options.